While some of this information is accurate, I have one main issue with this configuration: when Traefik runs in non-swarm mode, it cannot detect containers running on other swarm nodes.

2021 March 14

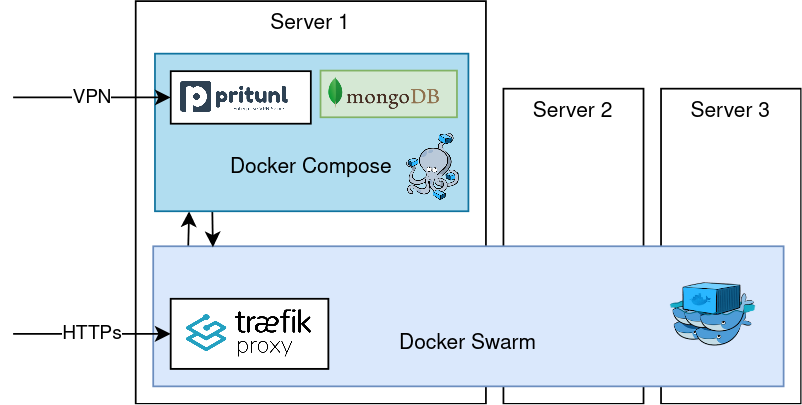

Over the last few weeks I’ve been deploying a set of new services on three servers. As I want every service to be as independent as possible from the others, I decided to use Docker, and for orchestration I chose Swarm. As far as I can tell, there is a trend of leaving Swarm for Kubernetes, but being new to both technologies, and given the nice tutorials available at https://dockerswarm.rocks/, I decided to go with Swarm.

I got the three servers running smoothly, following the tutorial from Docker Swarm Rocks. I used Traefik Proxy to make the containers accessible from the outside world, and installed other useful software, like MinIO, an S3-like object store, and Swarmpit to keep an eye on the swarm. Other services will be installed later, such as databases and other local containers, but they are not relevant at the moment.

As I need an external machine to access a MySQL database sitting in the Swarm, and I did not want to expose its ports to the outside world, I decided to set up a VPN. For that, I used Pritunl, which runs on top of OpenVPN and has a nice web interface for account management.

My main problem arose when I noticed that Pritunl requires direct access to the machine hardware, and therefore has to run in privileged mode, and that it is not possible to set up a privileged container in a Swarm.

The remainder of this article explains the changes I made, after following the awesome Docker Swarm Rocks tutorial, to get everything working smoothly.

I must stress that I am not a Docker guru. I am learning, and just decided to share the little I know, in the hope that it is useful to someone else. If you spot any mistake, please let me know, so I can fix it.

Network

The network suggested by the Traefik tutorial on Docker Swarm Rocks is created as a Swarm-only network. Containers that run outside the swarm cannot attach to it, so they cannot reach the swarm services (and vice versa).

Thus, one of the first tasks is to stop every stack you have running on that swarm, delete the network (usually called traefik-public) and recreate it as attachable:

    docker network rm traefik-public
    docker network create --driver=overlay --attachable --subnet 10.0.7.0/24 traefik-public

In the commands above, note that you must specify it as an overlay network. For this setup the subnet is not optional either: you need it so you know which route to add in the Pritunl web interface to allow VPN clients to access your swarm network.
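To double-check the recreated network, the JSON printed by `docker network inspect traefik-public` can be examined. The following is only a minimal Python sketch, not part of the original setup: the helper names `network_problems` and `inspect_network` are hypothetical, and it assumes the `docker` CLI is available on the node where it runs.

```python
import json
import subprocess


def network_problems(info, expected_subnet="10.0.7.0/24"):
    """Return a list of problems found in one `docker network inspect` entry."""
    problems = []
    if info.get("Driver") != "overlay":
        problems.append("driver is not overlay")
    if not info.get("Attachable", False):
        problems.append("network is not attachable")
    # IPAM.Config is a list of {"Subnet": ..., "Gateway": ...} entries.
    configs = (info.get("IPAM") or {}).get("Config") or []
    subnets = [config.get("Subnet") for config in configs]
    if expected_subnet not in subnets:
        problems.append("subnet %s not found (got %s)" % (expected_subnet, subnets))
    return problems


def inspect_network(name="traefik-public"):
    """Run `docker network inspect NAME` and return its first (only) entry."""
    out = subprocess.run(
        ["docker", "network", "inspect", name],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)[0]
```

Calling `network_problems(inspect_network())` should return an empty list when the network is an attachable overlay with the expected subnet.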

Traefik Configuration

Traefik is great because it keeps an eye on the containers that are running, and on their labels. These labels are used by Traefik to decide which ports to expose, under which domain, whether to automatically create a Let’s Encrypt certificate, etc.

Unfortunately, Traefik can watch either swarm instance labels (the ones set on the service, under deploy) or container labels, but not both. If you choose the first, Traefik will not see containers outside the Swarm. If you choose the second, Traefik will not see the Swarm containers unless you change the way labels are associated with them, as the Docker Swarm Rocks tutorial uses instance labels.

Thus, the first change is to make Traefik aware of container labels, and convert all instance labels into container labels.

    version: '3.6'

    services:
      traefik:
        image: traefik:v2.4.5
        ports:
          - 80:80
          - 443:443
        deploy:
          placement:
            constraints:
              - node.labels.traefik-public.traefik-public-certificates == true
        labels:
          traefik.enable: "true"
          traefik.docker.network: traefik-public
          traefik.constraint-label: traefik-public
          traefik.http.middlewares.admin-auth.basicauth.users: "admin:$$apr1$$xxxxx$$xxxxxxxx"
          traefik.http.middlewares.https-redirect.redirectscheme.scheme: https
          traefik.http.middlewares.https-redirect.redirectscheme.permanent: "true"
          traefik.http.routers.traefik-public-http.rule: Host(`traefik.africamediaonline.com`)
          traefik.http.routers.traefik-public-http.entrypoints: http
          traefik.http.routers.traefik-public-http.middlewares: https-redirect
          traefik.http.routers.traefik-public-https.rule: Host(`traefik.africamediaonline.com`)
          traefik.http.routers.traefik-public-https.entrypoints: https
          traefik.http.routers.traefik-public-https.tls: "true"
          traefik.http.routers.traefik-public-https.service: api@internal
          traefik.http.routers.traefik-public-https.tls.certresolver: le
          traefik.http.routers.traefik-public-https.middlewares: admin-auth
          traefik.http.services.traefik-public.loadbalancer.server.port: 8080
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock:ro
          - ./traefik-public-certificates:/certificates
        command:
          - --providers.docker
          - --providers.docker.constraints=Label(`traefik.constraint-label`, `traefik-public`)
          - --providers.docker.exposedbydefault=false
          - --entrypoints.http.address=:80
          - --entrypoints.https.address=:443
          - --certificatesresolvers.le.acme.email=x@xxx.com
          - --certificatesresolvers.le.acme.storage=/certificates/acme.json
          - --certificatesresolvers.le.acme.tlschallenge=true
          - --accesslog
          - --log
          - --api
          - --serversTransport.insecureSkipVerify=true
        networks:
          - traefik-public

    volumes:
      traefik-public-certificates:

    networks:
      traefik-public:
        external: true

Most of the code above is similar to what Docker Swarm Rocks proposes. Take note of the following changes: (i) labels are no longer inside the deploy section, but directly under the service. Labels were also converted from a list to a dictionary; I do not think that is a requirement, but I prefer them this way. (ii) In the command parameters, the option providers.docker.swarmmode was removed, so Traefik reads container labels instead of watching only swarm services. (iii) The command parameter serversTransport.insecureSkipVerify was added, so that Traefik doesn’t try to validate the Pritunl self-signed certificate.
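The list-to-dictionary conversion from change (i) is mechanical, so it can be scripted when a stack file has many labels. Below is a small Python sketch of the idea; the function name `labels_list_to_dict` is hypothetical, and it assumes the list entries have the usual `key=value` shape.

```python
def labels_list_to_dict(labels):
    """Convert ["key=value", ...] labels into a {"key": "value"} mapping."""
    result = {}
    for item in labels:
        # Split on the first "=" only: values such as password hashes
        # may contain "=" themselves. An entry without "=" gets an empty value.
        key, _, value = item.partition("=")
        result[key] = value
    return result


deploy_labels = [
    "traefik.enable=true",
    "traefik.docker.network=traefik-public",
    "traefik.http.routers.pritunl-https.rule=Host(`pritunl.xxx.com`)",
]
for key, value in labels_list_to_dict(deploy_labels).items():
    print("%s: %s" % (key, value))
```

All values come out as strings, so when they are pasted into a compose file, values such as true still need quotes to stay strings in YAML.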

Pritunl Configuration

The Pritunl configuration is based on their default docker-compose file, with some minor changes.

    version: '3.9'

    services:
      mongo:
        image: mongo:3.2
        volumes:
          - /srv/pritunl/mongo-data:/data/db
        networks:
          - pritunl-net

      pritunl:
        build: .
        depends_on:
          - mongo
        ports:
          # Only the VPN port is published; the web UI goes through Traefik.
          - "1194:1194/udp"
        privileged: true
        environment:
          - REVERSE_PROXY=true
          - WIREGUARD=true
        networks:
          - pritunl-net
          - traefik-public
        labels:
          # Label values must be strings, so booleans are quoted.
          traefik.enable: "true"
          traefik.docker.network: traefik-public
          traefik.constraint-label: traefik-public
          traefik.http.routers.pritunl-http.rule: Host(`pritunl.xxx.com`)
          traefik.http.routers.pritunl-http.entrypoints: http
          traefik.http.routers.pritunl-http.middlewares: https-redirect
          traefik.http.routers.pritunl-https.rule: Host(`pritunl.xxx.com`)
          traefik.http.routers.pritunl-https.entrypoints: https
          traefik.http.routers.pritunl-https.tls: "true"
          traefik.http.routers.pritunl-https.tls.certresolver: le
          traefik.http.services.pritunl.loadbalancer.server.port: 443
          traefik.http.services.pritunl.loadbalancer.server.scheme: https

    networks:
      traefik-public:
        external: true
      pritunl-net:
        ipam:
          config:
            - subnet: 10.10.20.0/24

In this configuration, the relevant part is the set of Traefik labels, which are similar to those of every other site we want to make available through it. Under networks, add traefik-public, so that clients connected to the VPN are able to access it. Note that this is only possible because the network was created with the attachable option. For the internal network used by the Mongo and Pritunl containers, I just defined a specific range, so I know it will not collide with other networks I have on the same machine. Note also that I removed the published ports 80 and 443, as the web interface is reachable through Traefik. The only published port is the one used by the VPN clients.
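Whether two ranges collide can be checked with Python's standard `ipaddress` module. The snippet below is just a sketch: the function name `overlapping_pairs` is hypothetical, and the dictionary lists only the two subnets mentioned in this post, so any other network on the machine would have to be added by hand.

```python
import ipaddress


def overlapping_pairs(subnets):
    """Return every pair of named subnets whose address ranges overlap."""
    parsed = [(name, ipaddress.ip_network(cidr)) for name, cidr in subnets.items()]
    clashes = []
    for i, (name_a, net_a) in enumerate(parsed):
        for name_b, net_b in parsed[i + 1:]:
            if net_a.overlaps(net_b):
                clashes.append((name_a, name_b))
    return clashes


networks = {
    "traefik-public": "10.0.7.0/24",  # attachable overlay network
    "pritunl-net": "10.10.20.0/24",   # internal Mongo/Pritunl network
}
print(overlapping_pairs(networks))  # prints []
```

An empty list means no two ranges share addresses, which is the condition needed so that the route added in Pritunl points unambiguously at the swarm network.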

Accessing Containers from VPN

One of the main problems is how to let VPN clients connect to specific containers. One option would be to use a static IP configuration. Unfortunately, that is not yet supported in Docker Swarm (and probably never will be). The current solution is not the one I like the most, but it works: I have a cron job that inspects the Docker containers that are relevant to me and publishes their IPs on a DuckDNS.org account.

There are some projects that provide a Docker image acting as a DNS server. That might be an option, but at the moment I opted to go with the simpler solution.
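A job of this kind could be sketched in Python as follows. This is an illustration under assumptions, not the exact script used here: the function names are hypothetical, the container name, subdomain and token are placeholders to fill in, and the script has to run on the node where the container actually lives, since `docker inspect` only sees local containers.

```python
import json
import subprocess
import urllib.parse
import urllib.request


def container_ip(inspect_json, network="traefik-public"):
    """Extract a container's IP on `network` from `docker inspect` output."""
    info = json.loads(inspect_json)[0]
    return info["NetworkSettings"]["Networks"][network]["IPAddress"]


def duckdns_url(domain, token, ip):
    """Build the DuckDNS update URL for one subdomain."""
    query = urllib.parse.urlencode({"domains": domain, "token": token, "ip": ip})
    return "https://www.duckdns.org/update?" + query


def update(container, domain, token):
    """Look up the container IP and push it to DuckDNS."""
    out = subprocess.run(
        ["docker", "inspect", container],
        check=True, capture_output=True, text=True,
    ).stdout
    url = duckdns_url(domain, token, container_ip(out))
    with urllib.request.urlopen(url, timeout=10) as response:
        # DuckDNS answers with the text "OK" or "KO".
        return response.read().decode().strip()
```

A cron entry would then call `update()` with the container name, the DuckDNS subdomain and the account token every few minutes, so the DNS record follows the container whenever it is recreated with a new IP.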

Conclusions

It is not easy to find this kind of discussion on the internet or in forums. I managed to get to this working structure after struggling for a few days. As stated above, this might have some mistakes. I am not a proficient user of Docker, so most of it was achieved by trial and error.

Finally, if you find anything that is not properly explained, any mistake, or any other thing I can complete this post with, please drop me a line.

Changelog:

2021.03.14: Given the lack of support for static IPs, I’ve added a section on using a Dynamic DNS provider, like DuckDNS.